Illustration: Michael Haddad

What is mixing when it comes to the world of audio?

Put simply, mixing is the process of enhancing and blending together the individual elements that make up our song. While composition is all about making cool rhythms, memorable melodies, and colorful chord progressions, mixing places the focus on more elusive sonic aspects like making the whole picture sound clear, exciting, and dynamic.

While there’s no use in putting lipstick on a pig (i.e., an awesome mix can’t ‘save’ a bad composition), the value of mixing can’t be overstated: a great mix not only helps create a commercial-sounding end product, but also plays a key role in elevating the emotional impact of our track.

In this article, let’s learn how to mix music by exploring the three key processes that constitute mixing: balancing levels, panning, and applying audio effects.

Feel free to use the table of contents below to easily navigate from section to section:

What you’ll learn:

- Balancing levels

- Balancing the stereo image

- Using audio plugins

- What is EQ?

- What is delay?

- What is reverb?

- What is compression?

- Conclusion

Feeling ready? Let’s dive into the world of mixing!

Balancing levels

If everything is loud, nothing is loud—balancing the level, or loudness, of individual elements in your track is one of the most fundamental steps in mixing. There are two main ways to balance levels in a DAW: using a fader and via automation. Let’s explore each method and their pros and cons.

Using a fader

In the timeline / arrangement view of most DAWs, you’ll see a fader that looks something like this next to each of your tracks:

A track fader in Logic Pro X

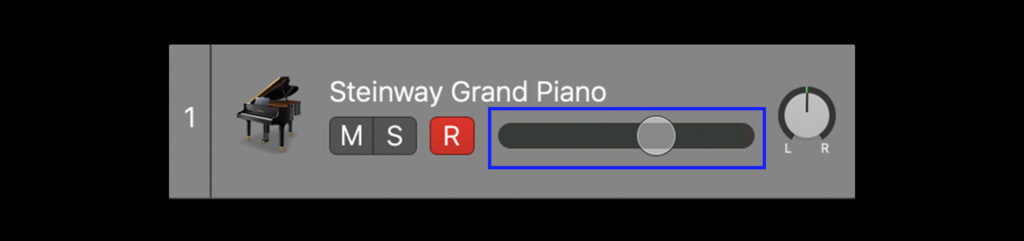

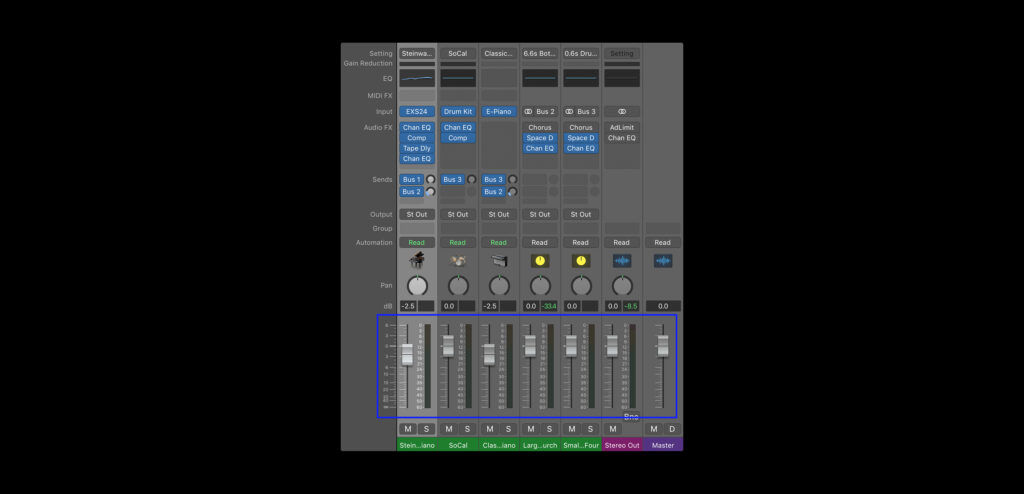

You may also see your faders laid out in a dedicated mixer view, which typically looks something like this:

The faders in the mixer view of Logic Pro X

If you click and drag the fader in either direction, you’ll see a value expressed in decibels (dB) go up or down accordingly. Decibels are the unit used to express level, and in most DAWs, a new track’s fader will be set to 0.0 dB by default. If you play your song as you adjust the fader, you’ll hear the track’s volume change in real time:

Try adjusting the fader until the track seems to be at the right volume in relation to the rest of the mix. Faders are particularly useful for quickly and easily setting the single, unchanging level of an individual track, and for many elements of your mix, the fader alone will do just fine.
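To make the decibel readout a little less abstract, here is a minimal sketch of how a fader value relates to the multiplier applied to a track's samples. It assumes the standard amplitude convention of 20 times the base-10 logarithm; the function names `db_to_gain` and `gain_to_db` are made up for this illustration and don't come from any particular DAW.

```python
import math

def db_to_gain(db):
    """Convert a level in decibels to a linear amplitude multiplier."""
    return 10 ** (db / 20)

def gain_to_db(gain):
    """Convert a linear amplitude multiplier (must be > 0) to decibels."""
    return 20 * math.log10(gain)

# A fader at 0.0 dB leaves the signal untouched; about -6 dB roughly halves the amplitude.
for fader_db in (0.0, -6.0, -12.0, 6.0):
    print(f"{fader_db:+.1f} dB -> multiply samples by {db_to_gain(fader_db):.3f}")
```

This is why small fader moves matter: the scale is logarithmic, so every 6 dB or so doubles or halves the amplitude.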

However, there may be a few components of your mix that need to change in volume depending on the part of the song. For example, maybe the guitars are a soft background element during the verses, but amp up in loudness during the chorus. Now, you could create separate tracks for the ‘verse guitar’ and ‘chorus guitar,’ and set their levels independently. However, another common solution would be to use automation.

How to use automation when mixing music

As the name suggests, automation is a technique that allows you to automatically adjust a specific parameter (in our case, level) across time. All common DAWs have some form of an automation curve feature, which allows you to adjust a parameter at specific moments in time by drawing in points and lines that vertically represent different values. Conveniently, the parameter that these features display by default will most likely be track volume.

Below, see how an automation edit allows us to change the volume midway through the drum part. Notice how the fader moves accordingly without us having to touch it:

Another thing that automation curves can be used for is to create fade-ins and fade-outs, which can be done by stretching out the distance between two points:

While automation curves are extremely helpful in mixing, note that once you create some points, the fader will snap back to whatever values those points specify, so you can no longer use it to set a static level (you can still move it during real-time playback). There’s a workaround that we’ll touch on in a bit, but for this reason, it’s best to use automation selectively and with intention.
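Under the hood, an automation curve can be thought of as a list of points with straight lines drawn between them. The sketch below is one simple way to model that, assuming linear interpolation in decibels between breakpoints; real DAWs may offer curved segments too, and `automation_value` is a hypothetical helper rather than any DAW's actual API.

```python
def automation_value(points, t):
    """Level in dB at time t (seconds), interpolating linearly between breakpoints.

    points: list of (time_seconds, level_db) tuples, sorted by time.
    """
    if t <= points[0][0]:
        return points[0][1]          # before the first point: hold its value
    for (t0, v0), (t1, v1) in zip(points, points[1:]):
        if t0 <= t < t1:
            return v0 + (v1 - v0) * (t - t0) / (t1 - t0)
    return points[-1][1]             # at or after the last point: hold its value

# A four-second fade-in: the further apart the two points, the slower the fade.
fade_in = [(0.0, -60.0), (4.0, 0.0)]

# Drums hold at 0 dB, then dip by 6 dB over half a second starting at 8 seconds.
drum_drop = [(0.0, 0.0), (8.0, 0.0), (8.5, -6.0)]

print(automation_value(fade_in, 2.0))
print(automation_value(drum_drop, 8.25))
```

Stretching out the distance between two points simply spreads the same level change over more time, which is what turns a sudden jump into a fade.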

Thinking about levels in mixing

While the concept of adjusting volume is relatively simple, getting the levels right is really half the battle (if not more) when it comes to mixing. When balancing the levels of your tracks, think about what you want to be the main focal point of your mix.

For a pop record, maybe it’s the vocals; for a house track, it might be the kick drum. A piano may be a background element during the majority of a song, but maybe it needs to come to the forefront for a solo halfway into the track. No matter what it may be, identify your priority, and then set the rest of the levels in relation to it.

The curse of clipping

A key thing to keep in mind as you set levels is the master fader. In every DAW, you’ll find this one fader that displays the combined level of all of your tracks. As a general rule, make sure that the level displayed on this fader never exceeds 0.0 dB, since that’s the upper limit for how high you can push your level before the audio starts clipping, or distorting (essentially, you’ll get noises in your audio that are—outside of very niche artistic circumstances—unwanted).
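As a rough illustration of why the master level can clip even when no single track does, here is a small sketch that sums two tracks and checks the peak of the result. It assumes that a sample value of 1.0 corresponds to 0.0 dB on the master meter, which is a common convention but an assumption here; the sample values and the `mix_peak_db` helper are invented for the example.

```python
import math

def mix_peak_db(tracks):
    """Sum equal-length tracks sample by sample and return the peak of the sum in dB.

    Assumes a sample value of 1.0 corresponds to 0.0 dB on the master meter.
    """
    mixed = [sum(samples) for samples in zip(*tracks)]
    peak = max(abs(s) for s in mixed)
    return 20 * math.log10(peak) if peak > 0 else float("-inf")

kick = [0.8, 0.0, -0.7, 0.0]
bass = [0.5, 0.3, -0.4, 0.1]

peak_db = mix_peak_db([kick, bass])
clips = peak_db > 0.0                      # True: neither track clips alone, but the sum does

# Pulling both faders down by 3 dB brings the combined peak back under 0.0 dB.
trim = 10 ** (-3.0 / 20)
trimmed = [[s * trim for s in track] for track in (kick, bass)]
trimmed_peak_db = mix_peak_db(trimmed)

print(f"before: {peak_db:+.2f} dB, after trim: {trimmed_peak_db:+.2f} dB")
```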

Advanced techniques

Faders and automation curves are truly all you need to set levels. That said, while we won’t be covering them in detail for this introductory piece, there are a few more advanced techniques that can be explored as you continue to learn how to mix music.

One is assigning a gain plugin (we’ll get to the concept of audio plugins a bit later) to a track, and automating it instead of your fader. This allows you to create curves, while still keeping the fader available at your fingertips for adjusting the overall level (including any automation). Another technique is editing the clip gain of audio clips, which is another way to dynamically change levels without touching your fader, or even drawing curves for that matter.

Don’t worry too much about these techniques for now, as you’ll naturally come across them as you progress in your mixing journey; just know that there are more neat tricks out there that can be explored if desired.

Balancing the stereo image

Another key aspect of mixing is arranging the stereo image, or the balance of the perceived spatial locations all of your sounds are coming from. When you listen to your favorite song, you’ll probably notice that certain sounds are coming from the left or right side of your speakers / headphones, while others seem to be hitting you from both sides equally (or from what perceptually feels like ‘the middle’).

These are decisions that the mixing engineer made, often with one of two broad intentions: (1) to create a sense of spaciousness and clarity in the mix, or (2) to achieve some sort of creative effect (e.g., a drum fill might move from the left side of the mix to the right side to add excitement and simulate the drummer moving across the kit).

When you create new tracks in your own project, you may have noticed that they’re all placed in the center of the stereo image by default. Even if the levels are balanced, if every track is sitting in the center, things can start to feel two-dimensional and crowded; you might find that it’s hard to make out the lyrics, the synth solo might get drowned out by the piano, etc.

What is panning?

Panning is the technique we use to assign individual tracks to specific positions in our stereo image. We can pan tracks to the ‘hard left’ (i.e. 100% of the audio comes from the left speaker), the ‘hard right’ (i.e. 100% of the audio comes from the right speaker), the ‘center’ (i.e. the signal is equally distributed to the left and right speakers), or anywhere in between (achieved by having more signal on one side than the other, but some amount is reproduced on both).

Generally, you’ll probably keep a few things in the center, and the rest of the tracks will be somewhere between the center and the hard left or hard right.
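For the curious, here is a sketch of how a pan position could translate into left and right gains. It uses a constant-power pan law, which is one common choice; DAWs differ in which pan law they apply by default, so treat this as an illustration rather than a description of any specific product. The `pan_gains` function and its -1.0 to 1.0 range are assumptions made for the example.

```python
import math

def pan_gains(pan):
    """Constant-power pan law.

    pan runs from -1.0 (hard left) through 0.0 (center) to 1.0 (hard right).
    Returns (left_gain, right_gain).
    """
    angle = (pan + 1.0) * math.pi / 4    # 0 at hard left, pi/2 at hard right
    return math.cos(angle), math.sin(angle)

for position in (-1.0, -0.5, 0.0, 0.5, 1.0):
    left, right = pan_gains(position)
    print(f"pan {position:+.1f}: left {left:.3f}, right {right:.3f}")
```

At the center both sides receive the same gain, and at the extremes all of the signal goes to one speaker, matching the positions described above.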

How to use panning when mixing music

In order to set the panning of a sound in our mix, we use a tool called the pan knob. In most DAWs, you’ll find this near the fader.

Simply turn the knob in either direction, and as you do, you’ll hear the sound move across the stereo image.

General guidelines for panning

Like most things, panning should be used creatively to help convey whatever you want to achieve with your music. That said, while there are always exceptions, below are a few guidelines to follow if you aren’t sure where to get started.

- Balance is king: In most cases, we want roughly the same amount of overall level coming from both sides of our mix, even if different instruments live on each side. Therefore, work with an overall sense of equilibrium in mind. For example, if a guitar and synth are both outlining a chord progression, you can try panning one to the left and the other to the right by an equal amount to let each instrument breathe while still maintaining a sense of balance. While you might not use them at first, most DAWs also include utility tools such as level meters and correlation meters, which give you a numerical read on your stereo image: the left and right levels show how balanced the two sides are, and the correlation value shows how similar the two channels are to each other.

- Some elements tend to stay in the middle: Vocals, the kick, the snare, and the bass tend to be left in the center (or very close to it). This is because these are usually elements that ground us in a song, and it can feel odd to have them coming from just one side.

- Other elements are panned more often: On the other hand, guitars, synths, pianos, and keys are quite often panned. In fact, if you’re using a piano software instrument, you’ll often find that it has a degree of auto-panning, with the higher notes sounding on the right and the lower notes sounding on the left. This is also true for a lot of drum software instruments—while the kick and the snare will often be in the center, you may find that the hi-hats, cymbals, and toms are pre-panned to simulate their location in a real kit.

- Hard panning makes a statement: Hard panning is definitely used, but is generally used sparingly. This is because it can sometimes be a little jarring if executed poorly.
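If you'd like a numerical feel for the 'balance is king' guideline, the sketch below compares the level of the left and right channels and also computes a simple correlation value between them. The helper names and the tiny sample lists are made up for illustration, and a real meter would work on much longer stretches of audio.

```python
import math

def rms(samples):
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def balance_db(left, right):
    """Level of the left channel relative to the right, in dB (0 means equal)."""
    return 20 * math.log10(rms(left) / rms(right))

def correlation(left, right):
    """+1 when the channels move together, -1 when one is the inverse of the other."""
    num = sum(l * r for l, r in zip(left, right))
    den = math.sqrt(sum(l * l for l in left) * sum(r * r for r in right))
    return num / den

left = [0.5, -0.5, 0.5, -0.5]
right = [0.25, -0.25, 0.25, -0.25]          # same signal at half the amplitude
inverted = [-s for s in right]              # same signal, flipped upside down

print(f"balance: {balance_db(left, right):+.2f} dB toward the left")
print(f"correlation: {correlation(left, right):+.2f}")
print(f"correlation with inverted right: {correlation(left, inverted):+.2f}")
```

A balance value near 0 dB suggests the two sides carry similar level, while the correlation value says how alike the two channels are, which is a separate question.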

Advanced techniques

The best way to get better at panning is to simply listen to a lot of music you enjoy, and take note of what you hear. You may find that you either over-pan or under-pan at first, but you’ll soon hit a stride and before you know it, you’ll be making adjustments to the stereo image on the fly.

As with levels, there are a few advanced techniques that can be explored, even if we don’t cover them in depth today, such as widening (using psychoacoustic tricks to make your sounds feel ‘wider,’ even if they’re perceptually in the middle), automation (our new friend can be applied to panning as well, to create a sense of movement), and stereo tremolo (an audio effect that can be used to create musically-timed movements in panning).

With balancing levels and the stereo image covered, let’s dive into the next concept: using audio plugins.

Using audio plugins for mixing music

Both in music production and in the world of software at large, plugins are devices that extend the functionality of a program. In music production, audio plugins are devices (that either come with the DAW or can be purchased and installed from a third party) that serve one of these specific functions:

- Software instruments: Plugins that allow you to create music with virtual instruments (you may already be using these if you have synths or instrument emulations that are playing your MIDI melodies, drum grooves, or chord progressions for you).

- Effect plugins: Plugins that don’t perform the music, but instead apply some sort of processing on your tracks to alter their sound.

- Utility plugins: Plugins that don’t apply processing, but aid you in some other way through providing visual metering, control over gain, etc.

When mixing music, we’ll largely be relying on the latter two: effect and utility plugins. Today, we’ll specifically be focusing on four types of effect plugin that are used particularly often: EQ, delay, reverb, and compression.

How to add plugins in your DAW

Before we dive into each, note that all DAWs are pretty much guaranteed to have some built-in version of these four effects—see below for how to load them up in the most popular workstations:

With that covered, let’s dive into some of the effects we mentioned above in more detail, starting with EQ.

What is EQ?

EQ (a shorthand for equalization) is an incredibly useful tool in music production that allows us to control the balance of frequencies. Even if you’ve never used it in the music creation process, there’s a good chance you’ve played with some form of EQ before, whether it’s the bass and treble knobs on a stereo system or the equalizer settings on a streaming service or your phone’s music app.

What are frequencies in audio?

When we’re creating our own music, we think about rhythms, pitches, and chords. However, when we mix—and specifically use EQ—we’re often shifting gears and thinking more about the balance of frequencies, which are the actual vibrations that we hear.

Frequencies are measured by their cycles per second (essentially their speed), and are expressed via the unit hertz (Hz). While it can vary from person to person, the typical range of hearing for humans is 20 Hz – 20 kHz (20,000 Hz). When we hear a complex sound like the strumming of an electric guitar, there’s not just one or two of these frequencies at play—rather, there’s an intricate mix of countless frequencies within this wide range that make up the timbre, or sonic character, of the sound.

As stated in the beginning, what EQ allows us to do is adjust the balance of these frequencies to get the desired timbre we’re looking for. In other words, when we use EQ, we’re not making things lower or higher in pitch. Rather, we’re typically using it to make things sound brighter, bassier, clearer, etc.

What EQ looks like

Most DAWs come with a multi-band EQ plugin that looks something like this:

The Channel EQ in Logic Pro X

The x-axis represents frequency—we see that this EQ (and most EQs) covers the full range of hearing we mentioned earlier, 20 Hz – 20 kHz. The y-axis is decibels, which we covered when talking about levels. Taking these two axes into consideration, what an EQ essentially provides us is volume faders across the full frequency spectrum; we can use it to draw in boosts and cuts where we want to increase or decrease the level of certain frequency ranges.

What EQ sounds like

A listen is worth a thousand words. Let’s take a listen to how adjustments in EQ impact the sound of our drums from before in real time:

How to use EQ when mixing music

There are no rules for how to use EQ in mixing, and the best way to get good at it is to experiment with it firsthand. That said, we’ve highlighted some ideas below for how you might use EQ in your mix:

- To correct something: EQ is often used to bring out the best of a performance and to attenuate the less desirable elements. If your vocal performance lacks definition, you can give the higher frequencies a boost to make it feel more clear and crisp. On the other hand, if it sounds too harsh or hissy, you can reduce these same frequencies for a more well-rounded sound.

- To create space for something else: In general, instruments will sound clearer in your mix if they’re not competing for attention with a million other elements that mainly reside in the same frequency range. If you have a bass guitar and a kick drum, for example, you can use EQ to boost and cut different frequency ranges within the lower end (by just a few decibels at most—no need to overdo it) so that each sound has its own sonic range where it can shine.

- To achieve a creative effect: EQ can also be used in more extreme ways for creative purposes; for example, cutting out all frequencies below 2 kHz can make your audio sound like it’s playing out of a cheap radio, while if you cut everything above it, it can feel like you’re listening to something underwater. We can even use automation to change parameters over time, to simulate something being gradually brightened or drowned out.

- To achieve balance: For the most part, mixes sound the best when they have some content across our full range of hearing. We often use EQ with this macro perspective in mind, making sure our overall track sounds full and engaging.
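To see what 'cutting everything above a certain frequency' means in practice, here is a minimal sketch of a high cut built from a one-pole low-pass filter. This is a deliberately simple stand-in with a gentle slope; the EQ plugins in DAWs typically use steeper and more flexible filters. The function names, the 2 kHz cutoff, and the 44.1 kHz sample rate are assumptions chosen for the example.

```python
import math

def high_cut(samples, cutoff_hz, sample_rate=44100):
    """One-pole low-pass filter: attenuates content above cutoff_hz (about 6 dB per octave)."""
    a = 1 - math.exp(-2 * math.pi * cutoff_hz / sample_rate)
    out, y = [], 0.0
    for x in samples:
        y += a * (x - y)             # move the output a fraction of the way toward the input
        out.append(y)
    return out

def sine(freq_hz, seconds=0.1, sample_rate=44100):
    """Generate a full-scale sine wave at freq_hz."""
    n = int(seconds * sample_rate)
    return [math.sin(2 * math.pi * freq_hz * i / sample_rate) for i in range(n)]

def rms(samples):
    """Root-mean-square level of a list of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

# A low tone passes almost untouched, while a high tone is strongly reduced.
low_rms = rms(high_cut(sine(100), cutoff_hz=2000))
high_rms = rms(high_cut(sine(10000), cutoff_hz=2000))
print(f"100 Hz after the cut: {low_rms:.3f}, 10 kHz after the cut: {high_rms:.3f}")
```

Note that the pitch of each tone is unchanged; only its level is affected, which is the sense in which EQ shapes timbre rather than notes.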

What is delay?

Next up is delay, an effect that’s simple in principle, yet incredibly powerful and versatile. In short, delay takes a piece of audio, holds onto it, and then plays it back after a specified amount of time. The application of this effect that we’re likely the most familiar with is echo, a type of delay where the output is fed back into the input multiple times, with the volume decreasing after each iteration.

What delay sounds like

Here’s what a vocal sample sounds like without any delay:

…And here’s what it sounds like after some delay is applied:

The parameters of delay

When you use a delay plugin, you might come across a number of parameters. Here’s what each one means:

- Delay time: This value determines how long it takes for a single delay to occur, typically expressed in either milliseconds (ms) or beat divisions (1/4, 1/8, 1/16, 1/32, etc) that sync with your project’s BPM.

- Feedback: This is the percentage of the signal that you’d like to put back into the input of your delay after each iteration—the more feedback, the greater the number of echoes, and the more gradually they’ll fade.

- Mix: The mix describes the balance between the ‘dry’ (the original audio) and ‘wet’ (the effected audio) signals. If ‘dry’ is set to 0%, we’ll only hear the delays, and if ‘wet’ is set to 0%, we’ll only hear the original audio.

- Low cut / high cut: These parameters let you EQ low or high frequencies out of your delayed signal.
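The parameters above can be sketched in a few lines of code. The example below converts a beat division into milliseconds and then runs a very basic feedback delay over a single click, so that the echoes are easy to read off. It assumes a quarter note gets one beat and uses a single mix value (0.0 for fully dry, 1.0 for fully wet); both function names are hypothetical, and the low cut / high cut stage is left out for brevity.

```python
def beat_division_ms(bpm, division):
    """Length in milliseconds of a note division such as 1/4 or 1/8.

    Assumes a quarter note gets one beat.
    """
    return 60000.0 / bpm * 4 * division

def feedback_delay(dry, delay_samples, feedback, mix):
    """Basic feedback delay: the delayed output is scaled by `feedback` and fed back in.

    mix = 0.0 is fully dry, mix = 1.0 is fully wet.
    """
    buffer = [0.0] * delay_samples            # circular buffer holding the delayed signal
    out = []
    for i, x in enumerate(dry):
        pos = i % delay_samples
        wet = buffer[pos]                     # what was written delay_samples ago
        buffer[pos] = x + wet * feedback      # new input plus the fed-back echo
        out.append((1 - mix) * x + mix * wet)
    return out

print(beat_division_ms(120, 1 / 4))           # quarter-note delay time at 120 BPM

# A single click, delayed by 3 samples with 50% feedback, listening to the wet signal only.
click = [1.0] + [0.0] * 9
echoes = feedback_delay(click, delay_samples=3, feedback=0.5, mix=1.0)
print(echoes)
```

Each repeat arrives one delay time after the previous one and at half its level, which is the fading echo pattern described above.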

How to use delay when mixing music

When you’re first using a delay, you might want to keep the following things in mind:

- Watch the feedback: While we typically expect echoes to fade over time, at 100% feedback they never die away, and above 100% each repeat comes back louder than the one before it. This can create an infinite loop where things just keep getting louder and louder (until you cut the audio off), so unless you’re looking for that effect, make sure you keep the percentage below 100%.

- Be selective: Try to resist the urge to add delay to everything, as if used excessively, it can reduce the clarity in your mix. A little bit of delay goes a long way.

- Get creative: Delays can be used to achieve really neat musical effects like call-and-responses, rhythmic patterns, and harmonies. Don’t be afraid to experiment and have fun with your delays!
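The feedback warning can be checked with a little arithmetic. In a simple delay model where each trip through the feedback path multiplies the signal by the feedback amount, the level after several trips follows directly. The sketch below assumes that simplified model, and `echo_level_db` is a made-up helper name.

```python
import math

def echo_level_db(feedback_percent, trips):
    """Level change in dB after `trips` passes through the feedback path."""
    return 20 * math.log10((feedback_percent / 100) ** trips)

for feedback in (50, 100, 110):
    levels = [round(echo_level_db(feedback, n), 1) for n in range(1, 5)]
    print(f"feedback {feedback}%: {levels} dB")
```

Below 100% the numbers keep falling, at 100% they stay put, and above 100% they climb with every repeat.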

What is reverb?

Reverb is one of the most popular and adored effects out there, and it’s actually just a specific kind of delay. With reverb, the delays are so close together in time and so densely packed that you can’t tell them apart from each other (unlike an echo), which creates the lush perceptual effect of a sound lingering a bit longer in space.

We hear reverb in the real world every day. Most of our surroundings reflect sound, and at any given moment we’re bombarded by these sonic reflections. If we stand in an empty hall and clap, we hear a mixture of direct sound (sound that travels straight to your ears) and reflected sound (sound that radiates, bounces off of walls and other objects, and travels back to your ears). The mixture of these reflections is the reverb, and our brains interpret it to perceive the nature of the space we’re in. Thus, we mostly use reverb in our mixes to simulate spaces (although there are plenty of other creative uses for it).

What reverb sounds like

Here’s the same sample from before, which also happens to have no reverb:

…And here’s what it sounds like with reverb:

The parameters of reverb

Although there are many types of reverbs out there, they tend to share a few common core parameters:

- Decay: Also known as the reverb time, RT60, or reverb tail, this parameter tells you how long it takes for a reverb to fade by a certain level (60 dB). The longer the decay time, the longer you’ll hear the reverb linger in your mix.

- Early reflections: Early reflections are the initial group of reflections that happen when sound waves hit an object. More pronounced early reflections will perceptually place your listener in a small room, while a lower early reflection level will place your listener further away in your virtual space.

- Pre-delay: The pre-delay is how long it takes for a sound to leave its source and create its first reflection. A reverb with a significant pre-delay will cause the listener to feel like they’re in a large space.

Note that many reverb plugins also have low cut / high cut and mix parameters, which work the same way they do in delays.
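To connect the decay and pre-delay parameters to actual numbers, here is an idealized sketch of a reverb tail's level over time. It assumes the tail is silent until the pre-delay has passed and then falls smoothly by 60 dB over the decay time; real reverbs are far more intricate, and `reverb_tail_gain` is a hypothetical function used only for illustration.

```python
def reverb_tail_gain(t, rt60, pre_delay=0.0):
    """Idealized reverb tail level (as a linear multiplier) at time t, in seconds.

    Silent until pre_delay has elapsed, then falling by 60 dB every rt60 seconds.
    """
    if t < pre_delay:
        return 0.0
    return 10 ** (-3 * (t - pre_delay) / rt60)

# With a 2-second decay, the tail is 60 dB down (a multiplier of 0.001) after 2 seconds.
for t in (0.0, 0.5, 1.0, 2.0):
    print(f"t = {t:.1f} s: x{reverb_tail_gain(t, rt60=2.0):.4f}")
```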

How to use reverb when mixing music

When you’re first using reverb as you learn how to mix, you might want to keep the following things in mind:

- Be selective: Like delays, excessive reverb can reduce the clarity in your mix. Something might sound amazing with tons of reverb on it in isolation, but always keep the full song in mind, since that’s what really matters at the end of the day.

- Don’t use too many different reverbs: As we mentioned earlier, reverbs help us simulate a virtual space. For this reason, things will (generally) sound more consistent if you stick to using no more than a few different reverbs in your mix.

- Auxiliary tracks: This is a more advanced technique that we won’t dive into today, but know that you can preserve CPU resources by sending a bunch of tracks to one auxiliary track that contains your reverb plugin. This is less important for beginners, but it’ll become a very valuable technique as your projects’ track counts increase.

What is compression?

The last effect we’ll be covering today is compression. This one is definitely the most complex out of the four, so don’t feel overwhelmed if every aspect doesn’t make sense right away. Put simply, compression makes a track more consistent in level. More specifically, it reduces the overall dynamic range (the difference between the loudest and softest elements) of a piece of audio by detecting when it goes above a specified level, and then bringing it down by a specified amount only when that threshold is exceeded.

What compression sounds like

As always, taking a listen will help us better understand the written definition.

Here’s what a sample sounds like without any compression applied:

…And here’s what it sounds like after some compression:

What compression looks like

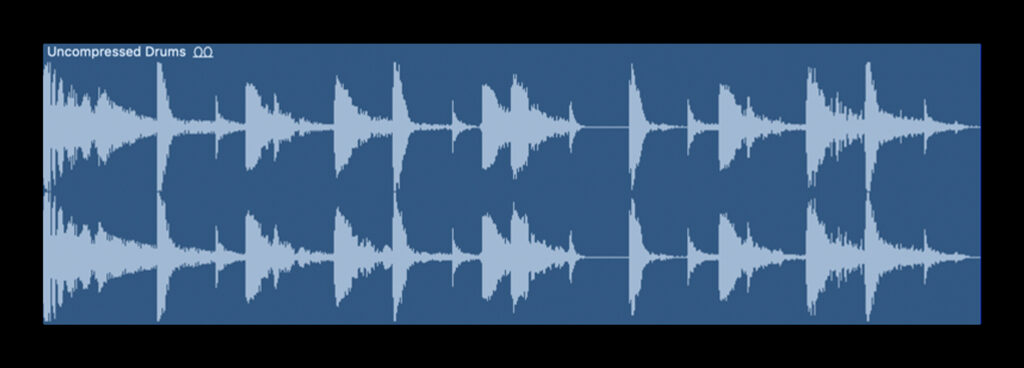

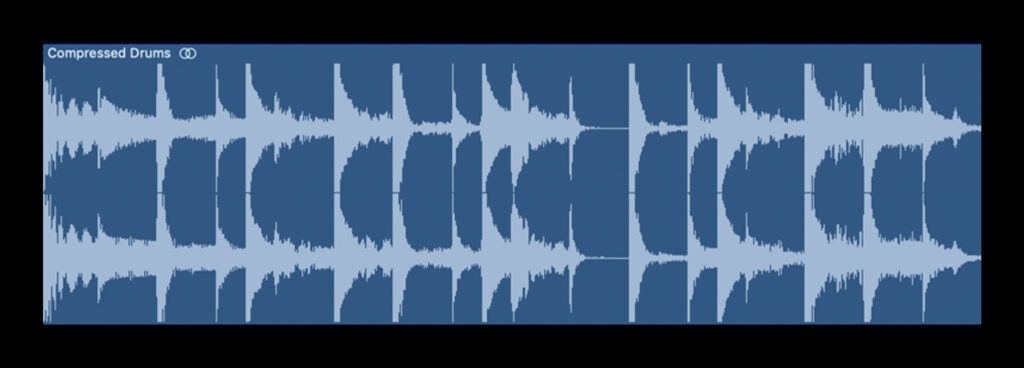

Compared to the other effects we explored, compression might feel a lot more subtle since differences in dynamic range are often harder to hear when compared to things like delay and reverb. However, if we listen carefully, we can hear that while there’s a significant difference in level between the hi-hats and the kick / snare in the uncompressed groove, all of the elements are much more even in the compressed version. This is visually reinforced when we compare the two waveforms:

The uncompressed drum groove—there’s a wide range between the louder elements (when the kicks / snares are sounding) and the soft elements (when only the hi-hats are sounding)

The compressed drum groove—the level of the hi-hats are now louder and closer to the level of the kicks and snares

The parameters of compression

Though there are always exceptions, most compressors (software or hardware that applies compression) share a common set of parameters:

- Threshold: A compressor’s threshold determines the level (in decibels) at which the compressor starts compressing. The threshold can be set strategically to target specific moments in your audio—for example, if a drum part’s snare is too loud, you can set the threshold so that it’s low enough that the snare triggers it, but high enough that the rest of the kit is bypassed when the snare isn’t sounding.

- Ratio: The ratio determines how much the level is brought down, once the signal exceeds the threshold. As an example, if the threshold is set to -20 dB, the input signal hits -16 dB, and the ratio is set to 2:1, the output signal will be attenuated to -18 dB. Because the input surpassed the threshold by 4 dB, the 2:1 ratio cuts this value in half, resulting in the output of -18 dB. Essentially, the lower the threshold and the higher the ratio, the more pronounced your compression will be.

- Attack / release: The attack, measured in milliseconds, determines how quickly the compressor pulls the input signal down to the full ratio value after it exceeds the threshold. The release is the opposite, representing the total time it takes for the signal to return to an uncompressed state.

- Make up gain: Most compressors have a make up gain knob that allows us to turn our sound back up in volume, since compression inherently reduces level.
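The threshold, ratio, and make up gain parameters can be written out as a small calculation. The sketch below reproduces the ratio example from the list (a -20 dB threshold, a -16 dB input, and a 2:1 ratio) and deliberately ignores attack and release, so it describes only where the level settles once the compressor is fully engaged. The `compress_db` function is a hypothetical helper, not any plugin's actual interface.

```python
def compress_db(input_db, threshold_db, ratio, makeup_db=0.0):
    """Output level in dB for a given input level, ignoring attack and release.

    Below the threshold the signal passes unchanged; above it, the amount by which
    the input exceeds the threshold is divided by the ratio.
    """
    if input_db <= threshold_db:
        return input_db + makeup_db
    return threshold_db + (input_db - threshold_db) / ratio + makeup_db

print(compress_db(-16.0, threshold_db=-20.0, ratio=2.0))   # 4 dB over becomes 2 dB over
print(compress_db(-30.0, threshold_db=-20.0, ratio=2.0))   # below threshold: unchanged
print(compress_db(-16.0, threshold_db=-20.0, ratio=2.0, makeup_db=2.0))
```

Lowering the threshold or raising the ratio in this function makes the gap between input and output larger, which is what a more pronounced compression setting amounts to.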

How to use compression when mixing music

Again, if compression feels significantly more complex than the other effects we explored, don’t worry—that’s because it is! If you don’t use it in your work at first, that’s totally okay, but we cover it here because in reality, it’s one of the most commonly-used effects in music production. Below are just some of the many reasons musicians reach for compression:

- To ‘glue’ elements together: If a sound is sticking out from the rest of the mix, a bit of compression can often help make things feel more blended together with the whole.

- To make volume automation easier: When defining threshold, we mentioned a specific example involving a snare in a drum groove. Using compression to automatically handle something like this can save us loads of time and headache in comparison to trying to manually draw in level automation curves each time the snare hits.

- To make things louder: This might seem a little counterintuitive, since compression by definition makes things softer. However, once we use the make up gain to bring up the level, we’ll actually be able to reach levels that are overall louder than before; because we tamed the peaks in our waveform, we can push the overall audio to higher levels before we run into distortion.
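The 'louder' point can feel counterintuitive, so here is a toy demonstration. It compresses a signal with two loud peaks and several quiet samples, then applies make up gain so the peaks return to their original height. The per-sample compressor, the -12 dB threshold, the 4:1 ratio, and the sample values are all assumptions picked to keep the example short; real compressors react over time rather than sample by sample.

```python
import math

def compress_sample(x, threshold_db, ratio):
    """Reduce a single sample's level if it is above the threshold (no attack or release)."""
    if x == 0.0:
        return 0.0
    level_db = 20 * math.log10(abs(x))
    if level_db <= threshold_db:
        return x
    out_db = threshold_db + (level_db - threshold_db) / ratio
    return math.copysign(10 ** (out_db / 20), x)

def rms(samples):
    """Root-mean-square level, a rough stand-in for perceived loudness."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

signal = [1.0, 0.2, -0.2, 0.2, -1.0, 0.2, -0.2, 0.2]     # two peaks, six quiet samples
compressed = [compress_sample(x, threshold_db=-12.0, ratio=4.0) for x in signal]

# Make up gain: bring the tamed peaks back up to where they were before.
makeup = 1.0 / max(abs(x) for x in compressed)
louder = [x * makeup for x in compressed]

print(f"peak before: {max(abs(x) for x in signal):.2f}, after: {max(abs(x) for x in louder):.2f}")
print(f"RMS before: {rms(signal):.3f}, after: {rms(louder):.3f}")
```

The peaks end up at the same height as before, but the quiet samples have come up with the make up gain, so the overall level is higher without exceeding the original peak.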

An introduction to mixing: Conclusion

The world of mixing is extremely deep, and there’s always more to learn. For example, while we covered four effects that are used to some extent in almost every production, there are countless other effects that are also used often, though more situationally. That said, less is more (especially when you’re first learning how to mix music), and this article should supply you with the essential tools you need in order to achieve a great mix.

As you make more music, you’ll probably start to find yourself doing some mixing as you write, balancing some levels and adding an effect or two on the fly. Although some may advocate for this mix-as-you-go approach while others will opt to save the bulk of it for a dedicated session (or pass their song off to a professional mixing engineer), know that there’s no wrong way to approach mixing. Don’t be afraid to iterate, experiment, and have fun!

If you’re reading this article as part of your journey towards creating your first track, go back to the curriculum that corresponds with your DAW and proceed to the next step:

July 19, 2022